Don't leave assumptions up to AI

AI is getting frighteningly good at the craft of design.

It can generate screens, flows, copy, research summaries, usability findings, and recommendations on what to fix next. For many teams, this feels like progress. For some, it feels like relief. But there's a trap here.

While AI can execute the work, there's one thing it cannot do reliably. And it happens to be the thing that quietly drives everything else.

AI can generate assumptions. It cannot tell you which ones are real, which ones are risky, or which ones are worth betting the company on.

That distinction matters more than most teams realise.

The cost of wrong beliefs

Design doesn't start with solutions; it starts with beliefs. Every product is built on a foundation of assumptions, whether we name them or not. We assume "this is a real problem," or "this is who we're designing for," or "this trade-off is acceptable."

If those foundational assumptions are wrong, speed doesn't help you. It just gets you to the wrong place faster.

Quibi raised $1.75 billion on the assumption that people wanted premium short-form video for their commutes. The execution was polished. The technology worked. The assumption was wrong. It shut down about six months after launch.

Most product failures aren't execution failures. They're assumption failures. And no amount of craft can rescue a flawed premise.

The design flywheel

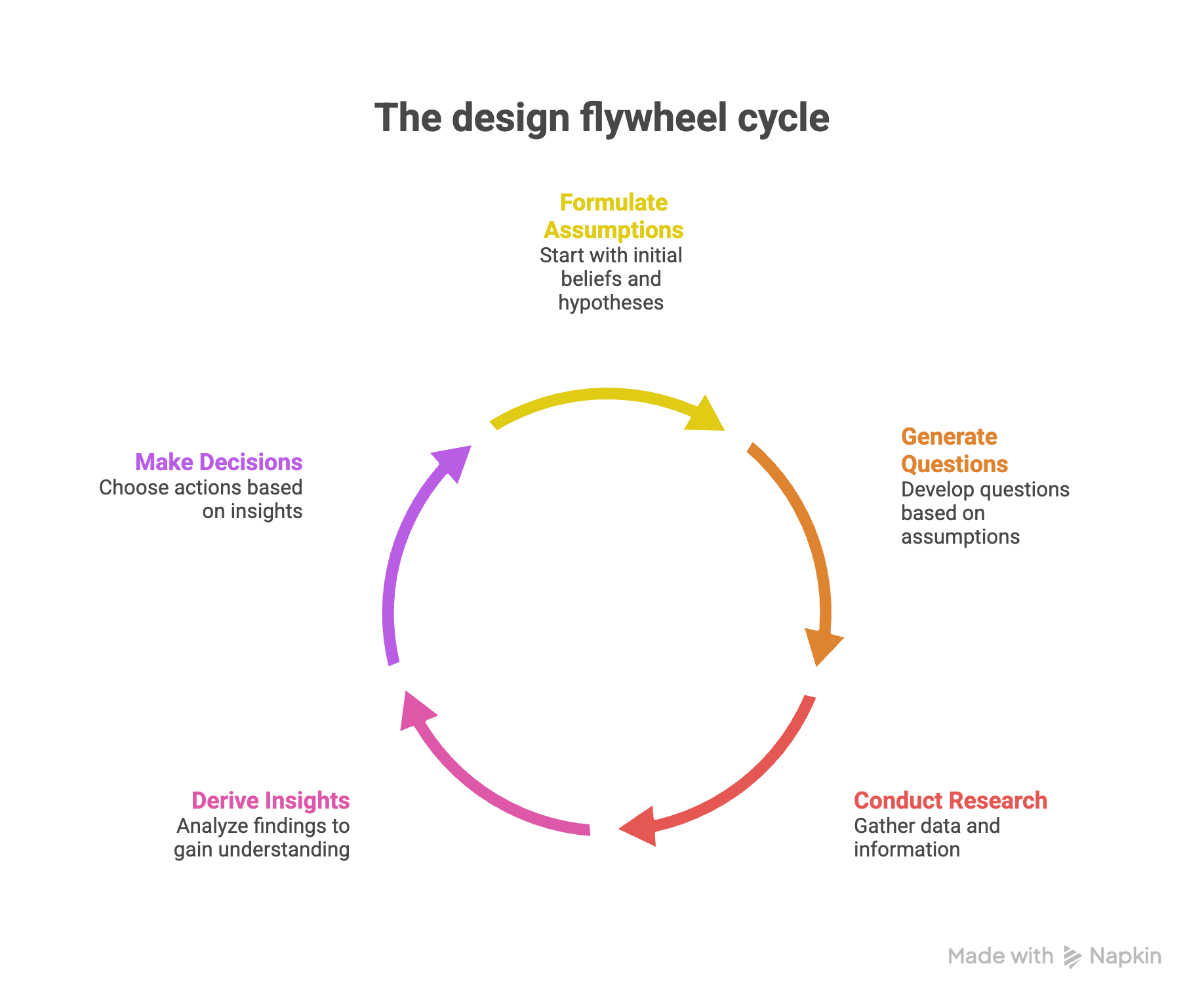

Think of design as a flywheel: Assumptions → Questions → Research → Insights → Decisions → (back to) Assumptions.

Each rotation should build momentum. Good assumptions lead to sharper questions, which produce more useful insights, which inform better decisions, which refine your assumptions for the next pass. The flywheel accelerates.

AI is excellent at the middle of this cycle. It can execute research, synthesise data, and spot patterns faster than we can. But assumptions sit upstream of all of it. They determine what questions get asked in the first place, what data gets considered relevant, and which patterns get flagged as significant.

If your starting assumptions are flawed, everything downstream inherits that flaw, and each rotation compounds the error. The flywheel spins faster, but in the wrong direction.

You cannot automate your way out of a bad premise.

Likelihood vs. consequence

Here's the part that catches teams off guard: AI is actually very good at generating assumptions. Give it enough context, and it will produce a list of plausible beliefs about your users, your market, your product.

But AI works from precedent. It optimises for likelihood, not consequence.

It can tell you what is probable. It cannot tell you what is load-bearing.

This is where AI research tools can quietly mislead you. When they surface "opportunities," they're optimising for likelihood: what the data suggests is probable. But calling something an opportunity isn't neutral. It's a judgment. You're assuming it's desirable, that it aligns with your goals, that it's worth the trade-offs.

AI can surface potential paths. It cannot decide which ones should exist. Treat AI-generated "opportunities" as hypotheses, not facts. The decision about what matters and what's worth pursuing belongs to humans.

Assumptions are judgment calls. They require someone to look at a belief and ask, "If this is wrong, what breaks?" AI can't answer that responsibly because it doesn't have to live with the fallout.

The assumption landscape

And the landscape is broader than most teams realise. Products don't fail because of one bad guess. They fail because of unexamined beliefs across multiple dimensions:

Problem assumptions

Does this problem actually exist, and is it worth solving?

User behaviour assumptions

Will people do what we expect them to do?

Technology assumptions

Can we actually build and maintain this?

Business assumptions

Will the economics work?

Interaction design assumptions

Will users understand how to use it?

Context assumptions

Where and when will people engage with this?

Timing assumptions

Is the market ready, or are we too early?

Integration assumptions

Does this fit into existing tools and workflows?

Scaling assumptions

Will what works now still work at 10x?

Retention assumptions

Will people come back after the first use?

Channel assumptions

How will people discover this?

Value attribution assumptions

Will users credit us for the outcome?

Each of these is a load-bearing belief. Get any of them wrong, and the product wobbles. Get several wrong, and it collapses.

AI can generate plausible answers for all of these. But it cannot tell you which ones deserve scrutiny, which ones carry the most risk, or which ones are quietly undermining everything else. That judgment, the meta-judgment of where to focus your doubt, remains human work.

Assumption guardians

This is why designers and researchers are being pushed upstream.

Execution has become cheap. Screens, components, summaries, even usability findings: none of these are scarce anymore. The centre of value has moved. As I wrote in "AI ate the design process", what remains valuable is judgment: the ability to know what's worth making.

Designers are shifting towards service design: thinking about full journeys, incentives, and systems rather than just interactions. Researchers are moving into strategic sense-making: not just "what did users say?" but "what should we believe, and how confident should we be?"

This isn't a career pivot for its own sake. It's a response to where the bottleneck has moved. Execution has been automated. Judgment hasn't.

Designers and researchers aren't just makers anymore. They're assumption guardians: the people who protect the product from false certainty. They surface beliefs deliberately, name them explicitly, frame them as testable bets (formally, hypotheses), and own the consequences.

Don't automate the risk

By all means, automate the analysis, the synthesis, the documentation. Let AI handle the middle of the flywheel.

But assumptions must be surfaced deliberately, named explicitly, and owned by humans.

If your role is defined purely by execution, AI will outpace you. But if your role is defined by framing the right problem and owning the decisions that follow, you just became more important, not less.

AI is a velocity tool, not a navigation tool.

Don't hand over the steering wheel.
